The Four Types of Dev Environments

Sample Chapter

Pai Mei brought Kiddo to the standing desk. On the desk was a brand new computer. Kiddo tried to install the latest version of NodeJS following Pai Mei’s written instructions. When it failed, she turned to her master. Pai Mei only frowned.

Kiddo, unsure how to proceed, said “Master, how am I to install software onto my computer?”

Pai Mei, enraged, smashed her knuckles with the end of his staff. “Your computer?”

Cradling her bruised hand, Kiddo cried: “Master, is it not mine?!”

Pai Mei, still enraged, again smashed Kiddo’s hand with his staff. After a single stroke of his long, white beard, he simply grumbled and glared at Kiddo.

Kiddo’s eyes dropped as she looked back at the computer. Her gnarled fingers could barely enter the search terms into the operating system vendor’s support site. In that moment, she was enlightened.

While there are actually an infinite number of dev environments, any given setup can be categorized along two axes, to give us four broad categories. This chapter explains why one of these is superior to the others.

First, a dev environment is categorized based on the instructions for setting it up: documentation on one side and automation on the other. I’d bet most environments you’ve used were heavy on documentation and light on automation.

The second axis relates to how the environment is run. Is it native—running everything directly on the developer’s workstation—or virtual—running in the cloud or a virtual environment?

What we’re building in this book is the best of the four options: automated and virtualized. Let’s talk through why automation is better than documentation and virtualization is better than native.

The Best Documentation is an Executable Script

Documentation is cheap to produce, especially if it isn’t maintained or well written. It’s often better than nothing, so most teams start their journey to a sustainable dev environment with a Markdown file or a wiki that outlines what you need to do to get set up. This does not scale. At all.

It’s extremely hard to write good documentation. It’s harder when what you are documenting is complex, which happens when your software installation system has to accommodate several package managers, operating systems, hardware architectures, and Pat, who insists on building everything from source.

Of course, even if you could achieve this, how is this documentation maintained? For dev environments, the new hires are usually charged with updating it when they find it doesn’t work. Over time, the steps become so convoluted that not even the most conscientious person can follow them.

Automation solves this. Automation shows exactly what has to happen because it makes it happen. Automation either works or doesn’t. Even though automation feels expensive to produce, it saves time the more it’s used.

Automation has two further advantages. First, developers already possess the skills to produce it, whereas they may or may not be good at writing. Second, automation can be tested. A script that sets up a working dev environment can be used to set up an environment for continuous integration, thus ensuring that the team is aware of issues quickly, and can fix them just as quickly.
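To make the testing point concrete, here is a minimal sketch of a check that could run unchanged on a laptop and in a continuous integration job. The function name `check_tools` and the tool names passed to it are illustrative placeholders, not part of any real project.

```shell
#!/usr/bin/env bash
# Sketch only: check_tools and the tools listed below are illustrative.
set -eu

# Prints one line per tool; returns non-zero if any tool is missing.
check_tools() {
  local missing=0
  local tool
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "ok: $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# Run the check; a non-zero exit fails a CI job just as it fails locally.
check_tools sh ls
```

Because the script exits non-zero when something is missing, a CI system would flag a broken setup without anyone having to read a wiki page to find out.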

Our Computers are Increasingly Not Under Our Control

If you happen to be a Ruby developer who uses a Mac, you’ve no doubt experienced the yearly problem when macOS releases an update and you can no longer install Ruby. Macs have long since stopped shipping with a reasonable version of Ruby, and you certainly can’t install gems (Ruby’s form of third-party libraries) without breaking something.

This is not something unique to Apple. Every OS vendor, in their quest for stability, will go to great lengths to prevent changes to what is considered the “system software”. If some script depends on a particular version of Perl, and you change that version, you could break the operating system.

Of course, it’s not just the operating system vendor. Many companies have IT and security teams tasked with preventing security incidents. A critical tool in doing so is to force operating system and software updates onto employees’ computers. These teams aren’t always able, or incentivized, to work with developers to ensure such updates won’t impact their ability to work. Even if they were, at the end of the day, security updates are going to be more important.

The reality is that we don’t really own all the software on our computers, and that we can’t easily understand how the various libraries and tools that come with it are affected by the libraries and tools we need to do our work.

Virtualization solves this. As long as your computer can run the virtualization software, you can run a virtual machine configured exactly how you like, and it won’t change out from under you. And your entire team can use that exact same version, even though said team might be using a myriad of different computers and operating systems.
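As a rough sketch of what “the exact same version” can look like in practice, the function below runs any command inside a throwaway container built from one pinned image. The image tag `ruby:3.3`, the `/work` mount point, and the names `in_dev_container`, `DEV_IMAGE`, and `DOCKER` are all assumptions made for illustration; this is not the setup the book goes on to build.

```shell
#!/usr/bin/env bash
# Sketch only: the image tag, mount point, and names here are assumptions.
set -eu

# One pinned image tag shared by the whole team.
DEV_IMAGE="${DEV_IMAGE:-ruby:3.3}"
# Overridable so the command can be dry-run by setting DOCKER to echo.
DOCKER="${DOCKER:-docker}"

# Runs the given command in a temporary container, with the current
# directory mounted at /work and used as the working directory.
in_dev_container() {
  "$DOCKER" run --rm \
    --volume "$PWD:/work" \
    --workdir /work \
    "$DEV_IMAGE" "$@"
}
```

With Docker installed, `in_dev_container ruby --version` would report the Ruby from the image rather than whatever the host operating system happens to ship.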

Virtualization can perform worse than running natively, but this is a worthwhile trade-off (and the performance gap keeps shrinking). I would be willing to bet that the time spent waiting for slightly slower tests is far outweighed by the time saved not wrestling with some arcane compiler flags every time something changes in your OS.

Automation and Virtualization Lead to Sustainability

An automated dev environment, based on virtualized operating systems, provides a solid foundation for building just about any app. The automation is never out of date, and the operating system can be kept stable.

Eschewing virtualization requires automating the setup of a developer laptop. While this is better than a documentation-based approach, it’s still highly complex. The automation must account for all operating systems and hardware.

When automating developer workstation setup, the team must either maintain that system themselves or rely on a third party. Whatever preconceived notions you may have about Docker, I can assure you that it’s simpler to have Docker install software than to write a script that must work across many different OSes and hardware profiles.
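To give a feel for that cross-platform burden, here is a hedged sketch of the branching a native setup script tends to accumulate. The function name `install_plan` and the branches it covers are illustrative only; a real script would need many more cases, and real install commands in place of the descriptions.

```shell
#!/usr/bin/env bash
# Sketch only: install_plan and its branches are illustrative, not exhaustive.
set -eu

# Describes the install route for an OS/architecture pair, using the
# values reported by `uname -s` and `uname -m`.
install_plan() {
  local os="$1"
  local arch="$2"
  case "$os/$arch" in
    Darwin/arm64)  echo "Homebrew under /opt/homebrew" ;;
    Darwin/x86_64) echo "Homebrew under /usr/local" ;;
    Linux/*)       echo "the distro package manager" ;;
    *)             echo "unsupported: $os/$arch"; return 1 ;;
  esac
}

# Every new laptop model or OS in the team means another branch here.
install_plan "$(uname -s)" "$(uname -m)" || echo "no branch for this machine yet"
```

Each branch is a place for the script to rot independently, which is the maintenance cost a single container image avoids.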

As for third-party solutions, they have to be installed themselves, and the team must understand how they work to debug or enhance them. This turns out to be more difficult than learning a commonly used tool like Docker. We’ll talk about this in the chapter “Tech Companies Should Not Own Your Dev Environment”.

On the other side, using a virtualized environment with documented instructions can be helpful, but you still fall victim to the pitfalls of documentation. Your docs might be simpler, since they can address the virtualized environment only, but they will still fall out of date.

We’re going to use Docker for virtualization, and a combination of Docker and Bash for automation. The reasons have to do with a hidden, third axis: how easy is it to understand the abstractions on which your dev environment is built?

You Must Always Understand One Level Below the Current Abstraction

The best abstractions are born from repeated applications of a technology for a well-defined use case. Writing assembly language gets tiresome, so C was invented. Even though assembly can do far more than C, for most common use cases, C is much faster and easier to use. It’s a great abstraction.

If you learn C and not assembly, you will eventually hit a limit. You won’t know exactly what problem C was created to solve and, eventually, there will be a problem that your knowledge of C alone cannot solve. You will need to learn a bit of assembly.

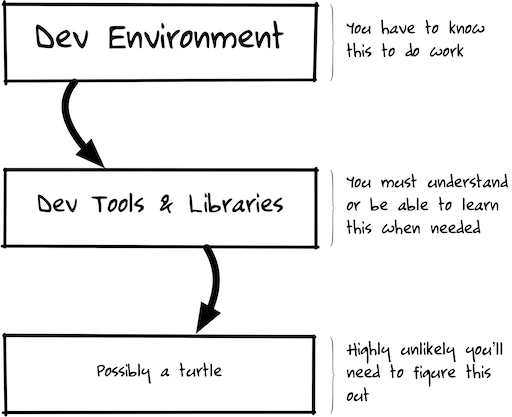

Your dev environment is the same way. Whatever mechanism you use to manage it, it is ultimately an abstraction on top of other technologies that are being orchestrated to manage your environment. When something goes wrong—either due to a bug or an unforeseen use case—you’ll need to pop the hood and see what’s under there.

Thus, you need to understand—or be able to get an understanding of—whatever your dev environment is built on, as shown below.

What this means is that your dev environment should use technologies that you either do, or can, understand. Applying this to your team, this also implies that there is more value in using commonly understood, battle-tested technologies than in using something that might tick off more features but is more esoteric or less likely to continue to exist past its next round of funding.

Docker, despite being VC-funded itself, is ubiquitous. A lot of people understand it, and it’s not going anywhere. There are even competing products that can build Docker images and run Docker containers.

And Bash...well...Bash will outlive us all. The only better investment in your career than learning Bash is learning SQL, and if there were a way to automate all this with SQL, I’d definitely be considering it.

To that end, we’ll now start our journey to learn this stuff. It’s not going to take too long. We’ll start with Docker, which, despite some warts and a few design flaws, is the best tool for the job of virtualizing our dev environment.